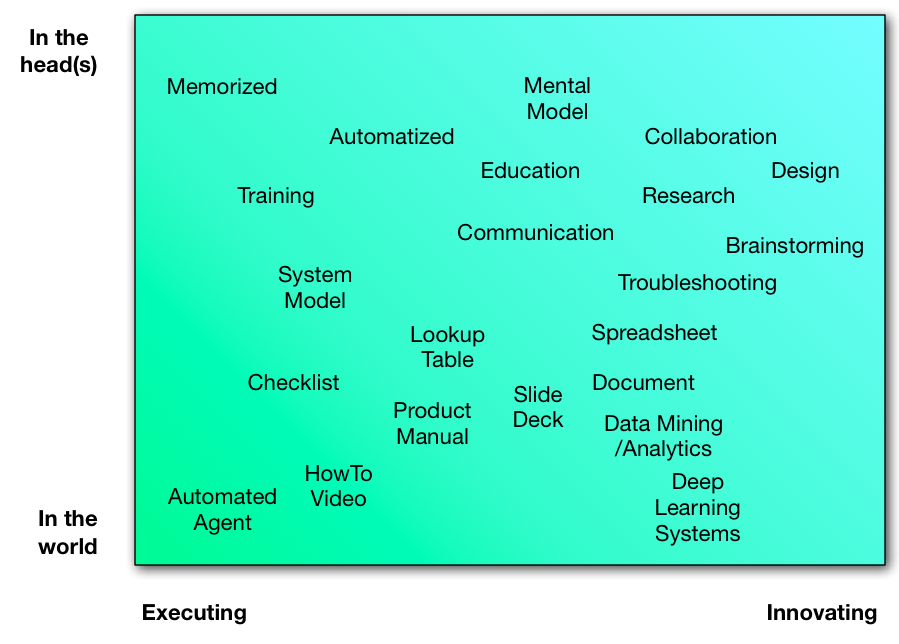

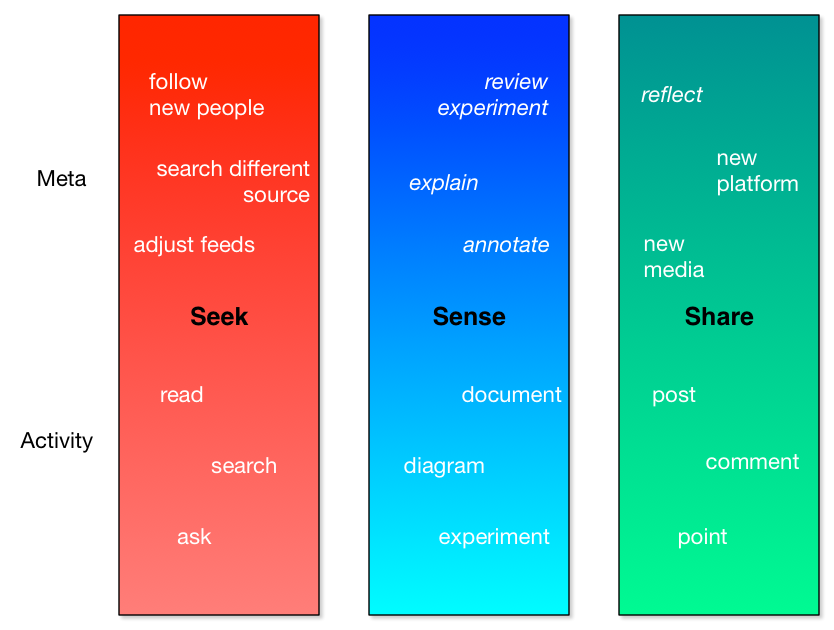

A few days ago, I got the idea to map different activities along two dimensions: whether they’re about executing or innovating, and whether they happen in the head or in the world. I’ve been playing with it since. I’m mapping some ways of getting work done, at least the mental aspects, across those dimensions.

I’m not sure I’ve got things in the right places. I’m not even sure what it all really means. I have some ideas, but I’m going to try something new and ask you what you think it means. So, what’s interesting or important here?