Instructional design, as is well documented, has its roots in meeting the needs for training in WWII. User experience (UX) came from the Human-Computer Interaction (HCI) revolution towards User-Centered Design. With a vibrant cross-fertilization of ideas, it’s natural that evolutions in one can influence the other (or not). It’s worth thinking about the trajectories and the intersections that are the roots of LXD, Learning eXperience Design.

I came from a background of computer science and education. While doing computer support for the office that ran the tutoring I had also engaged in, I saw the possibilities of the intersection. Eager to continue my education, I avidly explored learning and instruction, technology (particularly AI), and design. And the relationships among them, as well.

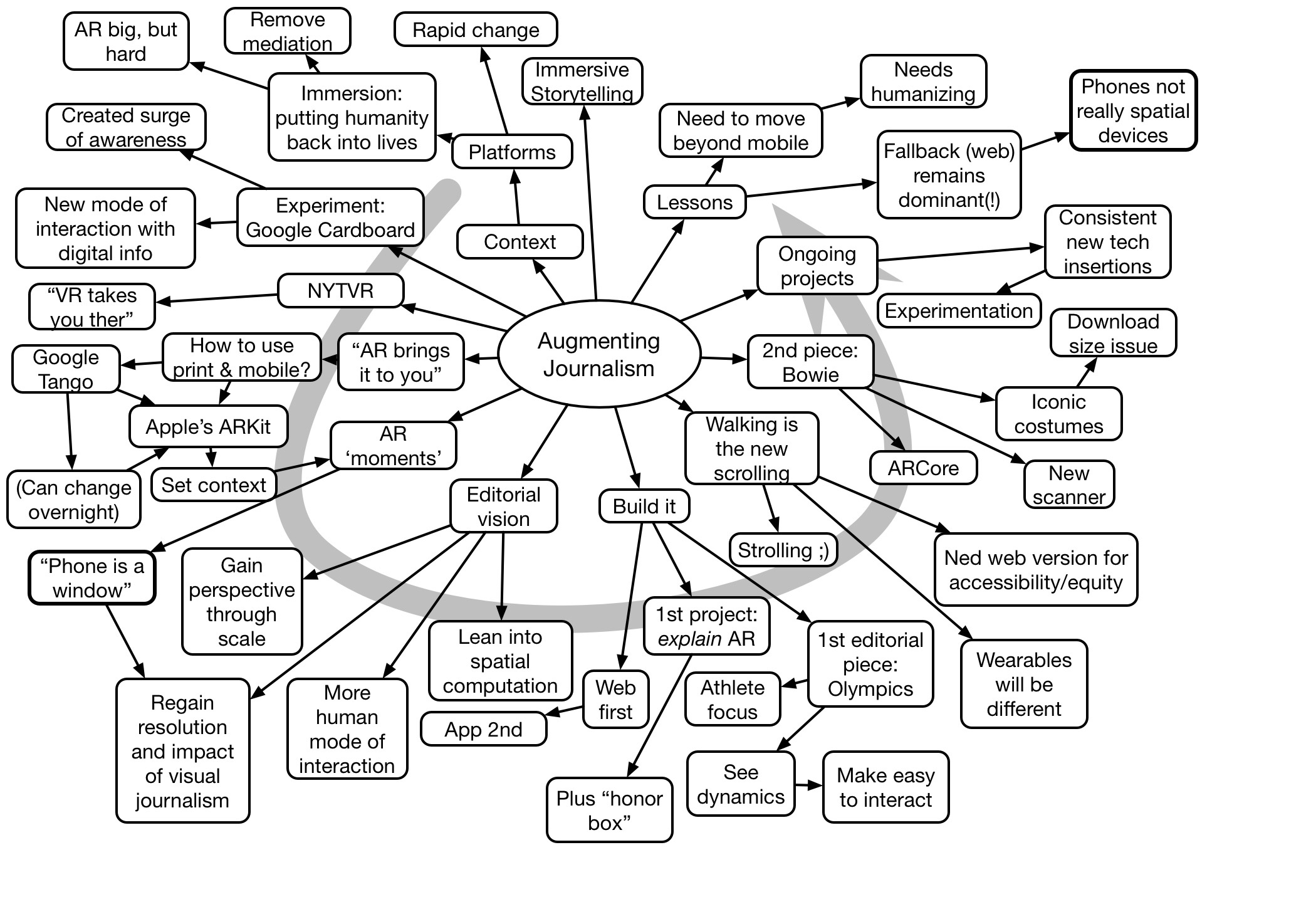

Starting with HCI (aka Usability), the lab I was in for grad school was leading the charge. The book User-Centered System Design was being pulled together as a collection of articles from the visitors who came and gave seminars, and a new view was emerging. The approaches pulled from a variety of disciplines such as architecture and theater, and focused on elements including participatory design, situated design, and iterative design, all of which are now incorporated in design thinking.

At that time, instructional design was going through some transitions. Charles Reigeluth was pulling together theories in the infamous ‘green book’ Instructional Design Theories and Models. David Merrill was switching from Component Display Theory to ID2. And there was a transition from behavioral to cognitive ID.

This was a dynamic time, though there wasn’t as much cross-talk as would’ve made sense. Frankly, I did a lot of my presentations at EdTech conferences on implications from HCI for ID approaches. HCI was going broad, exploring a variety of fields to tap, including popular media (a lot was sparked by the excitement around Pinball Construction Set), and not necessarily finding anything unique in instructional design. And EdTech was trying to map ID approaches to technology environments that were in rapid flux.

These days, LXD has emerged. UX grew out of the HCI field, with a separate society being created. The principles of UX, as cited above, became of interest to the learning design community. Explorations of efforts from related fields (agile, design thinking, etc.) made the notion of going beyond instructional design appealing.

Thus, considering its roots, LXD has a place and is a useful label. It moves thinking away from ‘instruction’ (which I fear makes it all too easy to focus on content presentation). And it brings in the emotional side. Further, I think it also enables thinking about the extended experience, not just ‘the course’. So I’m still a fan of Learning Experience Design (and now think of myself as an LXD strategist, considering platforms and policies to enable desirable outcomes).

—

As a side note, Customer Experience is a similarly new phenomenon that apparently arose on its own. And it’s been growing, from a start in post-purchase experience, through Net Promoter Scores and Customer Relationship Management. And it’s a good thing, now including everything from the initial contact to post-purchase satisfaction and everything in between. Further, people are recognizing that a good Employee Experience is a valuable contributor to the ability to deliver Customer Experience. I’m all for that.