There’s been such a division between formal and informal learning: a fight for resources, for mindspace, and over whether people can get their minds around making informal learning concrete. However, I’ve been preparing a presentation that looks at it another way, and I want to suggest that, at core, both are driven from the same point: how humans learn.

I was looking at the history of society, which has grown more and more complex. Organizationally, we went from the village to the city, and became hierarchical along the way. Businesses are now retreating from that model, trying to get flatter and more networked.

Organizational learning, however, seems to have done almost the opposite. It moved from networks of apprenticeship through most of history, through the dialectical approach of the Greeks that started imposing a hierarchy, to classrooms that treat each person as an independent node: identical, autonomous, and unconnected.

Certainly, we’re trying to improve our pedagogy (to more of an andragogy) by looking at how people really learn. In natural settings, we learn by being engaged in meaningful tasks, where there are resources to assist us and others to help us learn. We develop in communities of practice, with our learning distributed across time and across resources.

That’s what we’re trying to support through informal approaches to learning. We’re going beyond just making people ready for what we can anticipate, and supporting them in working together to go beyond what’s known, and be able to problem-solve, to innovate, to create new products, services, and solutions. We provide resources, and communication channels, and meaning representation tools.

And that’s what we should be shooting for in our formal learning, too. Not an artificial event, but meaningful activity that learners recognize as important, with resources to support them and, ideally, collaboration to help disambiguate and co-create understanding. The task may be artificial and the resources structured for success, but there’s much less of a gap between what they do for learning and what they do in practice.

In both cases, the learning is facilitated. Don’t assume self-learning skills; instead, support both task-oriented behaviors and the development of self-monitoring and self-learning.

The goal is to remove the artificial divide between formal and informal, and to recognize the continuum of developing skills from foundational abilities into new areas, developing learners from novices to experts, both in their domains and in learning.

This is the perspective that drives the vision of moving the learning organization’s role from ‘training’ to learning facilitator. Across all organizational knowledge activities, you may still design and develop, but you nurture as much, or more. So, nurture your understanding, and your learners. The outcome should be better learning for all.

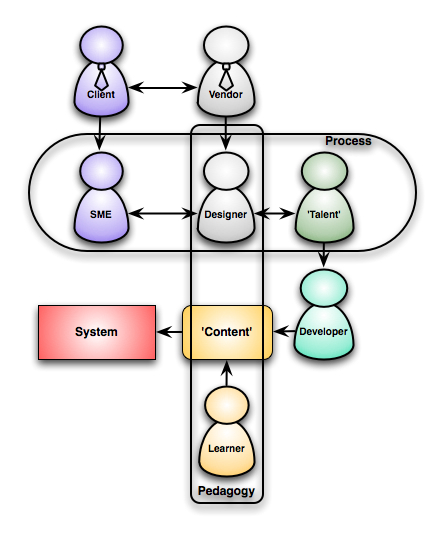

The point is, we have to quit looking at it as design, development, etc., and view it not just as a process, but as a system: one with lots of inputs, processes, and places to go wrong. I tried to capture a stereotypical system in this picture, with lots of caveats: clients or vendors may be internal or external, there may be more than one talent, and so on. It really is a simplified stereotype, with all the negative connotations that entails.
