How do I drive myself? I was asked that in a coaching session. The question was really about how I keep learning. There are multiple answers, which I’ve probably talked about before, but I’ll reflect here. I think it’s important to regularly ask: “how do you drive yourself?”

As it’s the end of the year, my conversant was looking at professional development. It’s the time to ask for next year’s opportunities, and the individual was breaking out of our usual conversation to talk about this topic. And so he asked me what I did.

And my first response, which I’ve practiced consciously at least since grad school, is that I accept challenges. That is, I take on tasks that stretch me. (It might be that ‘sucker’ tattoo on my forehead, but note that my philanthropic bandwidth is pretty stretched. ;) I do this both professionally and personally.

That is, I look for challenges that I think are within my reach, but not already within my grasp. Or, to put it another way, in my Zone of Proximal Development. I accept assignments or engagements where, with effort, I can succeed, but success isn’t guaranteed.

Which means, of course, that there’s risk as well. Occasionally, I do screw up. Which I really, really hate to do. That, in turn, drives me to push out of my comfort zone and succeed. Or, at least, learn the lesson.

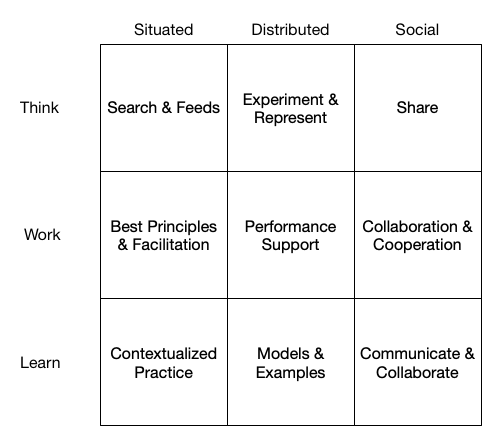

There’s more, of course. One practice started with my first Palm Pilot (the Palm III; the accompanying case is still my toiletry bag!). I had to justify the expense to myself, so I made sure I used it well. That habit was part of what drove the thinking that showed up in *Designing mLearning*: how to complement cognition. IA instead of AI, so to speak.

I also live the mantra “stay curious, my friends”. I’m still all too easily distracted by a new idea, but I don’t think that’s a bad thing. Well, as long as it’s balanced with executing against the challenges.

That’s how I drive myself. So, how do you drive yourself?