There are many phenomena associated with time. I know it was such a preoccupation for my late friend Jay Cross that he named his vehicle for agency Internet Time. A few phenomena have arisen in my thinking, and it’s time to review some of the reasons why timing matters.

So, for one, things seem to come in waves. It’ll be too quiet, and then too busy; too frenetic a rhythm. I might prefer a smoother rate, but…it’s not something we get to control. (At least, individually; societally we have chosen speed over quality, what with requirements for short-term returns.) Now, I do my best to cope, using the downtimes to work on background tasks so that, when it’s crunch time, I feel like I can still function.

That’s also been true when I’ve struggled. For one, when I had back pain (before I got surgery), I had good days and bad. My solution was to get things done on the good days, so I was okay on the bad ones. That, again, is a coping strategy.

Overall, things are going faster than we’d like. We are dealing with increasing change, and increasing information. Things like Harold Jarche’s Personal Knowledge Mastery (PKM) are tools we increasingly need to use, to be able to adapt.

Timing matters in other ways, too. Sometimes the time has to be right for an idea to stick. I regularly tell the story about how I mentioned the concept of ‘explorable interfaces’ (from Jean-Marc Robert) to my PhD supervisor, to no avail. Which surprised me, because our lab was looking at usability! However, I brought the concept up a few years later, and suddenly it was a great idea! Same idea, but right time.

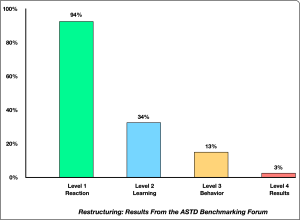

It’s true for learning as well. It’s not something we can rush! Yet we try to have an event and then move on, not recognizing that learning takes time. We need repeated reactivation and feedback for learning to occur, but that’s seldom the design of our organizational solutions. Technology gives us the ability to address this, if only we have the will.

And, we may need to make sure it’s the right time. We need to understand why something’s relevant to us before we’re ready for the message. That’s something I think we don’t focus on enough, hence my most recent tome.

We live in interesting times, as the saying has it. There’s little reason to believe things will slow down, even if they should. So, we need to be able to deal with it. Personally, and for our learners. Timing matters, as does how we deal with it. What are your strategies?