As part of a push for Learning Engineering, Carnegie Mellon University recently released their learning design tools. I’ve been aware of CMU’s Open Learning Initiative for a number of reasons, and of their tools for separate reasons. And I think both are good. I don’t completely align with their approach, but that’s ok, and I regularly cite their lead as someone who’s provided sage advice about doing good learning design. Further, their push, based upon Herb Simon’s thoughts about improving university education, is a good one. So what’s going on, and why?

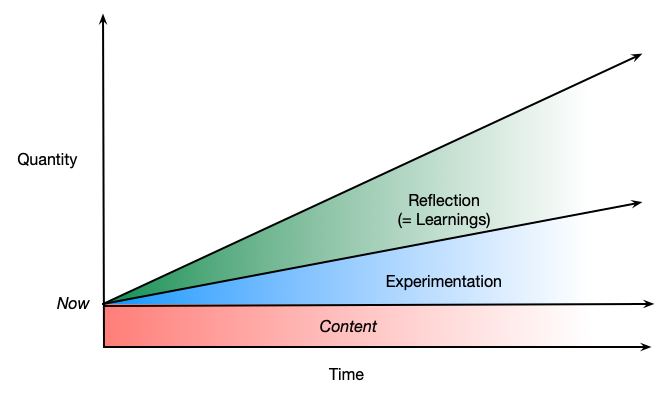

First, let’s be fair: most uni learning design isn’t very good. It’s a lot of content dump, and a test. And, yes, I’m stereotyping. But it’s not all that different from what we see too often in corporate elearning: not enough practice, and too much content. And we know the reasons for this.

For one, experts largely don’t have conscious access to what they do, owing to the nature of our cognitive architecture. We compile information away, and research from the Cognitive Technology Group at the University of Southern California has estimated that 70% of what experts do isn’t available to them. They literally can’t tell you what they do! But they can tell you what they know. University professors not only are likely to reflect this relationship; they frequently may not be practitioners at all, so they don’t really ‘do’! That compounds the likely focus on ‘know’ rather than ‘do’.

And, of course, most faculty aren’t particularly rewarded for teaching. Even institutions in the lower tiers of the Carnegie classification of research institutions dream of, and hire on, research potential. There may be lip service to quality of teaching, but if you can publish and get grants, you’re highly unlikely to be let go without some sort of drastic misstep.

And the solution isn’t, I suggest, trying to get faculty to be expert pedagogues. I’d also argue that, except perhaps at top-tier institutions, an institution’s teaching quality is perceived as a mark of its overall quality. And yet efforts to make teaching important, supported, valued, etc., still tend to be idiosyncratic. Yes, many institutions are creating central bodies to support faculty in improving their classes, but those folks are relatively powerless to substantially change the pedagogy unless they happen to find an eager faculty member.

CMU’s tools align, largely, with doing the right thing, and this is important. The more tools that make it easy to do the right thing (that is, to use rich pedagogies), the better. It makes much more sense, for instance, for the default to be separate feedback for each wrong answer than the alternative. Not that we always see that... but that’s an education problem. We need faculty and support staff to ‘get’ what good learning design is.

Ultimately, this is a good push forward. Combined with greater emphasis on teaching quality, even a movement towards competencies, and rigor in assessment, there’s hope of getting meaningful outcomes from our higher education investment. What I’ve said about K12 also holds true for higher ed: it’s both a curriculum and a pedagogy problem. But we can and should be pushing both forward. Here’s to steps in the right direction!