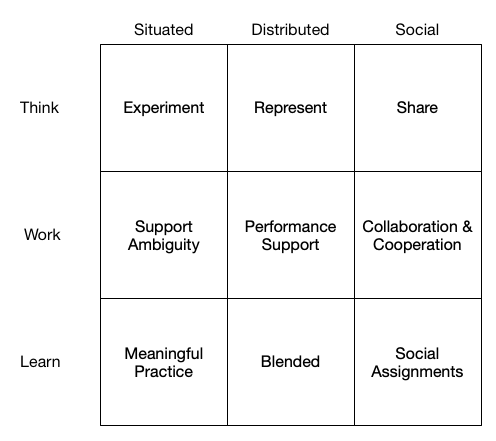

So, in my last post, I talked about exploring the links between cognitions on the one hand (situated, distributed, social), and contexts on the other (aligning with how we think, work, & learn). I did it one way, but then I thought to do it another, to instead consider Contexts by Cognitions, to see if I came to the same elements. And they weren’t quite identical! So I thought I should share that thinking, and then come to a reconciliation. Thinking out loud, as it were.

So in this one, I swapped the headings, emptied the matrix, and took a second stab at filling it out, with a relatively clear mind. (I generated the first diagram several days ago and had been iterating on it, but not today. Today I was writing it up and was early in the process, so I came to it relatively free of contamination. And of course, not completely, but this is ‘business significance’, not ‘statistical significance’ ;). The resulting diagram appears similar, but also has some differences.

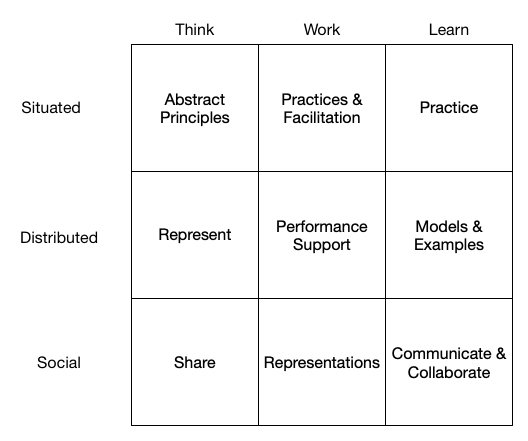

When we consider Thinking by Situated, we’re talking about coping with emergent situations. I thought the way to cope would be to be guided by best principles, that is, abstracted models. I thought representation was key for distributing one’s thinking, and sharing, of course, for social.

Working Situatedly suggested having in-house practices and facilitation. Of course, Distributed support for Work is performance support. And working socially suggests shared representations.

Finally, learning situated suggests the need for much practice (across contexts, I now think). Distributed supports for learning are models and examples. And social learning suggests communicating (e.g. discussions) and collaboration (group projects).

Interestingly, these results differ from my previous post. So, I think I’ll have to reconcile them. The fact that I did get different results, and it sparked some additional thinking, is good. Considering contexts by cognitions improved the outcomes, I think. And that’s worth thinking about!