I maintain a fascination with design, for several reasons. As Herb Simon famously said: “The proper study of mankind is the science of design.” My take is to twist the title of Henry Petroski’s book, To Engineer is Human, into ‘to design is human’. To me, design is both a fascinating study in cognition and an area of application. The latter seems to be flourishing!

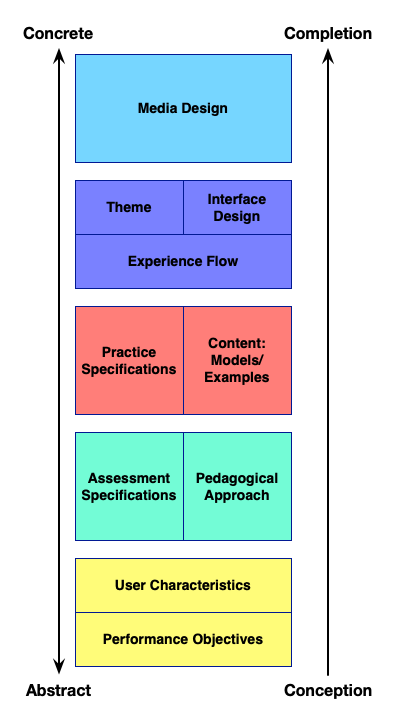

I’ve talked in the past about various design processes (and design overall, a lot). As we’ve moved from waterfall models like the original ADDIE, we’ve shifted to more iterative approaches. So, I’ve mentioned Michael Allen’s SAM, Megan Torrance’s LLAMA, etc.

And I’ve been hit with a few more! Just in the past few days I’ve seen LeaPS and EnABLE. These approaches increasingly reflect important issues in learning science. All of this is, to me, good. Whether they’re just learning design approaches, or more performance consulting (that is, starting with a premise that a course may not be the answer), it’s good to think consciously about design.

My interest in design came in a roundabout way. As an undergrad, I designed my own major in Computer-Based Education, and then got a job designing and programming educational computer games. What that didn’t do was teach me much about design as a practice. However, going back to grad school (for several reasons, including knowing that we didn’t have a good enough foundation for those game designs) got me steeped in cognition and design. Of course, what emerges is that they link at the wrists and ankles.

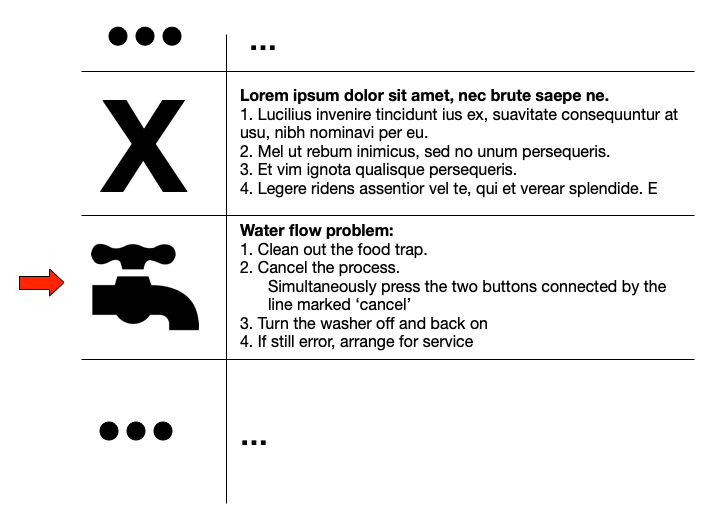

So, my lab was studying how to design interfaces. This included understanding how we think, so as to design to match. My twist was to also design for how we learn. However, more implicitly than explicitly perhaps, there was also the topic of how to design. Just as we have cognitive limitations as users, we have limitations as designers. Thus, we need to design our design processes, so as to minimize the errors our cognitive architecture will introduce.

Ultimately, what separates us from other creatures is our ability to create solutions to problems, to design. I know there’s now generative AI, but…it’s built on the average. I still think the superlative will come from people. Knowing when and how is important. Design is really what we want people to do, so it’s increasingly the focus of our learning designs. And it’s the process we use to create those solutions. Underpinning both is how we think, work, and learn.

To design is human, and so we need to understand humans to design optimally. Both for the process, and the product. This, I think, makes the case that we do need to understand our cognitive architecture in most everything we do. What do you think?

FWIW, I’ll be talking about the science of learning at DevLearn. Hope to see you there.